AI in Healthcare

Evolution, Adoption, Risks, and the Road Ahead

Recently, I had the privilege of presenting on this topic alongside Prof. G-J van Rooyen to an audience of doctors, health specialists, and members of the ExCo of Mediclinic. I decided to expand our presentation into this long-form article to share our learnings with a broader audience. The evolving role of AI in healthcare is a fascinating story, marked by bold promises, disappointments, incredible advancements, and emerging pitfalls.

AI Has Taken the World by Storm - But It's Not Only ChatGPT

AI exploded into mainstream consciousness with tools like ChatGPT, but the field is far broader than the current LLM hype. AI is a suite of technologies that have been evolving for decades, each with unique applications in healthcare.

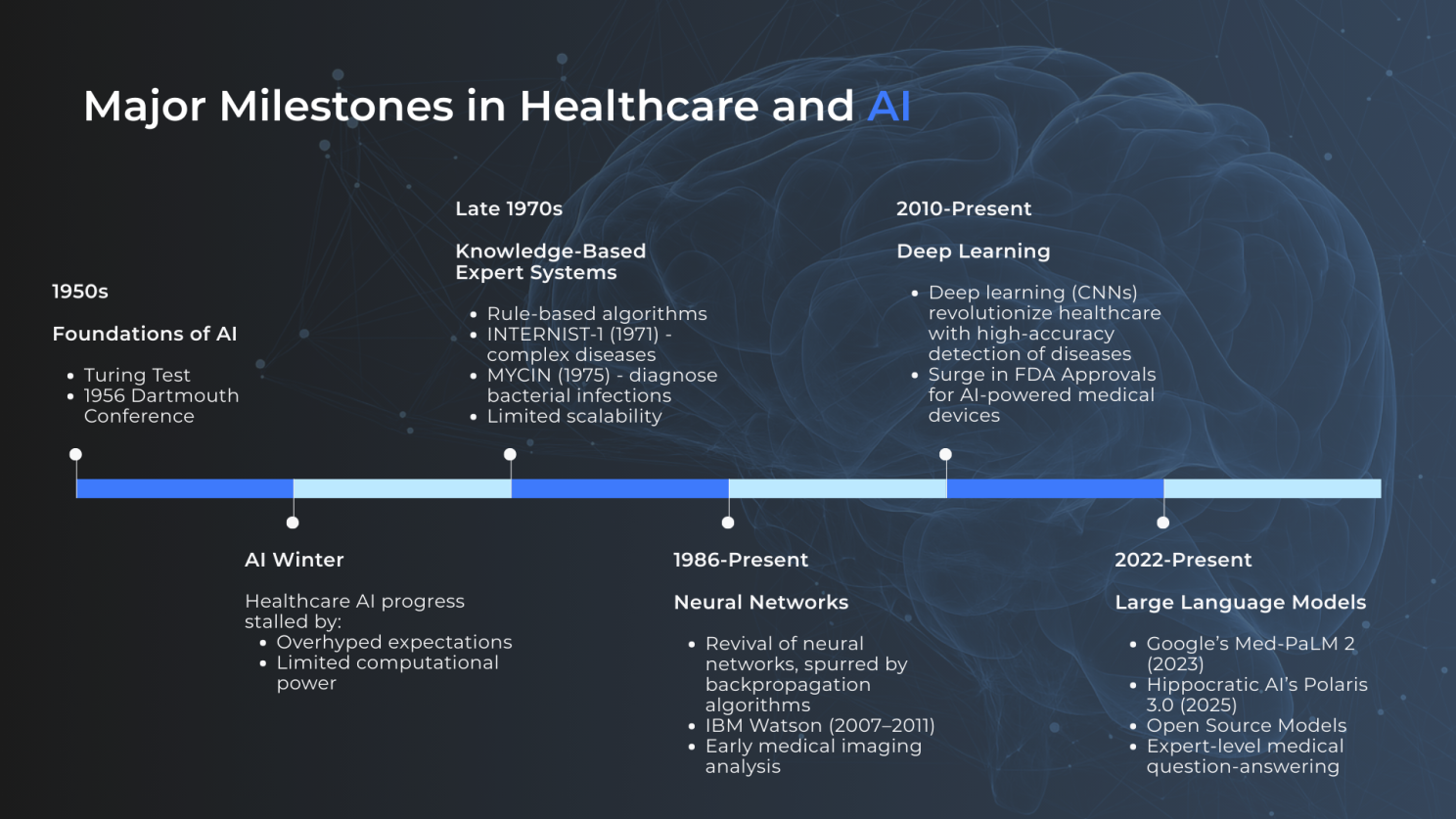

Major Milestones: From Great Expectations to Modern Breakthroughs

AI's journey in healthcare is a cyclic story of hype, disillusionment, and breakthroughs, with each breakthrough largely driven by advances in processing power. This evolution began with the 1956 Dartmouth Summer Research Project, widely regarded as the birthplace of modern AI. This two-month summer workshop brought together about 10 leading scientists to explore whether machines could simulate human intelligence, igniting great expectations for the field. But this initial exuberance was followed by an "AI Winter", when overhyped promises collided with limited computational power and progress stalled.

The thaw came with knowledge-based expert systems: computationally efficient, rule-based AI that mimics human decision-making through predefined if-then rules and a knowledge base. These systems are particularly useful in narrow domains, such as medical diagnosis, where structured logic can guide outcomes.
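To make the if-then idea concrete, here is a minimal forward-chaining sketch in Python, one common way such rule engines derive conclusions. The rules, fact names and the `forward_chain` helper are hypothetical and invented purely for illustration; they are not real clinical criteria, and historical expert systems were considerably richer (certainty factors, explanations, much larger knowledge bases).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset[str]  # "if" part: every one of these facts must be known
    conclusion: str             # "then" part: fact added when the rule fires


# Hypothetical toy rules for illustration only; NOT real clinical criteria.
RULES = [
    Rule("R1", frozenset({"fever", "cough"}), "possible_respiratory_infection"),
    Rule("R2", frozenset({"possible_respiratory_infection", "low_oxygen"}), "refer_for_chest_xray"),
    Rule("R3", frozenset({"rash", "fever"}), "possible_viral_exanthem"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Keep firing any rule whose conditions are all known until no new fact is derived."""
    known = set(facts)
    trace: list[str] = []  # order in which rules fired, so the reasoning can be explained
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(rule.name)
                changed = True
    return known, trace


facts, fired = forward_chain({"fever", "cough", "low_oxygen"}, RULES)
print(fired)  # → ['R1', 'R2']
```

The trace is what made this style of AI attractive in medicine: every conclusion can be explained by pointing to the exact rules that produced it.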

By 1986, neural networks had revived interest in AI. These are AI models inspired by the human brain, consisting of interconnected nodes (neurons) organised in layers that learn patterns from data during training. This period saw the birth of early medical image recognition, in which a computer scans X-rays for abnormalities.
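The idea of layered neurons learning from data can be sketched in plain Python. Below is a toy network with two inputs, two hidden neurons and one output neuron, trained by gradient descent with backpropagation (the training method popularised in 1986). The `TinyNet` class, the four-example dataset, the network size and the learning rate are all hypothetical choices for illustration; real image-recognition models are vastly larger and are built with dedicated libraries.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy data: two normalised "features" per case; label 1 only when both are high.
DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]


class TinyNet:
    """A 2-input -> 2-hidden -> 1-output network of sigmoid neurons (illustrative only)."""

    def __init__(self, n_in: int = 2, n_hidden: int = 2, seed: int = 0):
        rng = random.Random(seed)
        # Each hidden neuron has one weight per input plus a bias.
        self.w_h = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b_h = [0.0] * n_hidden
        # The output neuron has one weight per hidden neuron plus a bias.
        self.w_o = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_o = 0.0

    def forward(self, x):
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_h, self.b_h)]
        out = sigmoid(sum(w * h for w, h in zip(self.w_o, hidden)) + self.b_o)
        return hidden, out

    def loss(self, data) -> float:
        # Mean binary cross-entropy over the dataset.
        total = 0.0
        for x, y in data:
            _, p = self.forward(x)
            total -= y * math.log(p) + (1 - y) * math.log(1 - p)
        return total / len(data)

    def train_step(self, data, lr: float = 0.5) -> None:
        # Accumulate gradients over the whole batch (backpropagation), then update all weights.
        g_wh = [[0.0] * len(ws) for ws in self.w_h]
        g_bh = [0.0] * len(self.b_h)
        g_wo = [0.0] * len(self.w_o)
        g_bo = 0.0
        for x, y in data:
            hidden, p = self.forward(x)
            d_out = p - y  # gradient at the output for sigmoid + cross-entropy
            g_bo += d_out
            for j, h in enumerate(hidden):
                g_wo[j] += d_out * h
                d_h = d_out * self.w_o[j] * h * (1 - h)  # chain rule back through hidden neuron j
                g_bh[j] += d_h
                for i, xi in enumerate(x):
                    g_wh[j][i] += d_h * xi
        n = len(data)
        self.b_o -= lr * g_bo / n
        for j in range(len(self.w_o)):
            self.w_o[j] -= lr * g_wo[j] / n
            self.b_h[j] -= lr * g_bh[j] / n
            for i in range(len(self.w_h[j])):
                self.w_h[j][i] -= lr * g_wh[j][i] / n


net = TinyNet(seed=0)
before = net.loss(DATA)
for _ in range(5000):
    net.train_step(DATA)
after = net.loss(DATA)
print(f"loss before training: {before:.3f}, after: {after:.3f}")
```

Nothing in the code states the rule "both features high"; the network discovers it by repeatedly nudging its weights to reduce the error, which is the sense in which such models "learn patterns from data".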

Further advances in processing power in the 2010s brought deep learning, a more sophisticated extension of neural networks. Convolutional neural networks (CNNs) in particular enabled more advanced medical image analysis and disease detection, and this era saw a surge in FDA approvals for AI-powered medical devices. Deep learning has, for example, revolutionised skin cancer screening: camera-based systems are increasingly used to scan and classify every mole or abnormality on a patient's body within minutes, flagging high-risk lesions so that the doctor can focus on areas of concern.

Since 2022, large language models (LLMs), the technology behind most "GenAI" tools, have dominated headlines. These are advanced neural networks trained on vast text datasets to understand, process, and generate human-like language. LLMs are particularly well suited to providing a natural way for humans to interact with machines, and can mimic a human clinician's curiosity, empathy, and knowledge. GenAI has opened the door to direct AI-to-patient interactions.

How GenAI Transformed Software Development - Lessons for Healthcare

To put AI's impact on healthcare in context, consider its role in software development, where engineers have been avid early adopters. Stack Overflow's 2025 Developer Survey reveals that 84% of respondents use or plan to use AI tools in their development process, reflecting a global surge in code-related AI usage.

Notably, AI isn't replacing engineers, because its output still requires human oversight for quality and innovation. Instead, it boosts their productivity, by an estimated 20-30%. The role of software engineers is shifting toward architecture, prompt engineering, and ethics. This mirrors healthcare, where AI currently augments professionals and boosts productivity but does not replace human judgment and accountability.

Current Adoption: From Scepticism to Enthusiasm

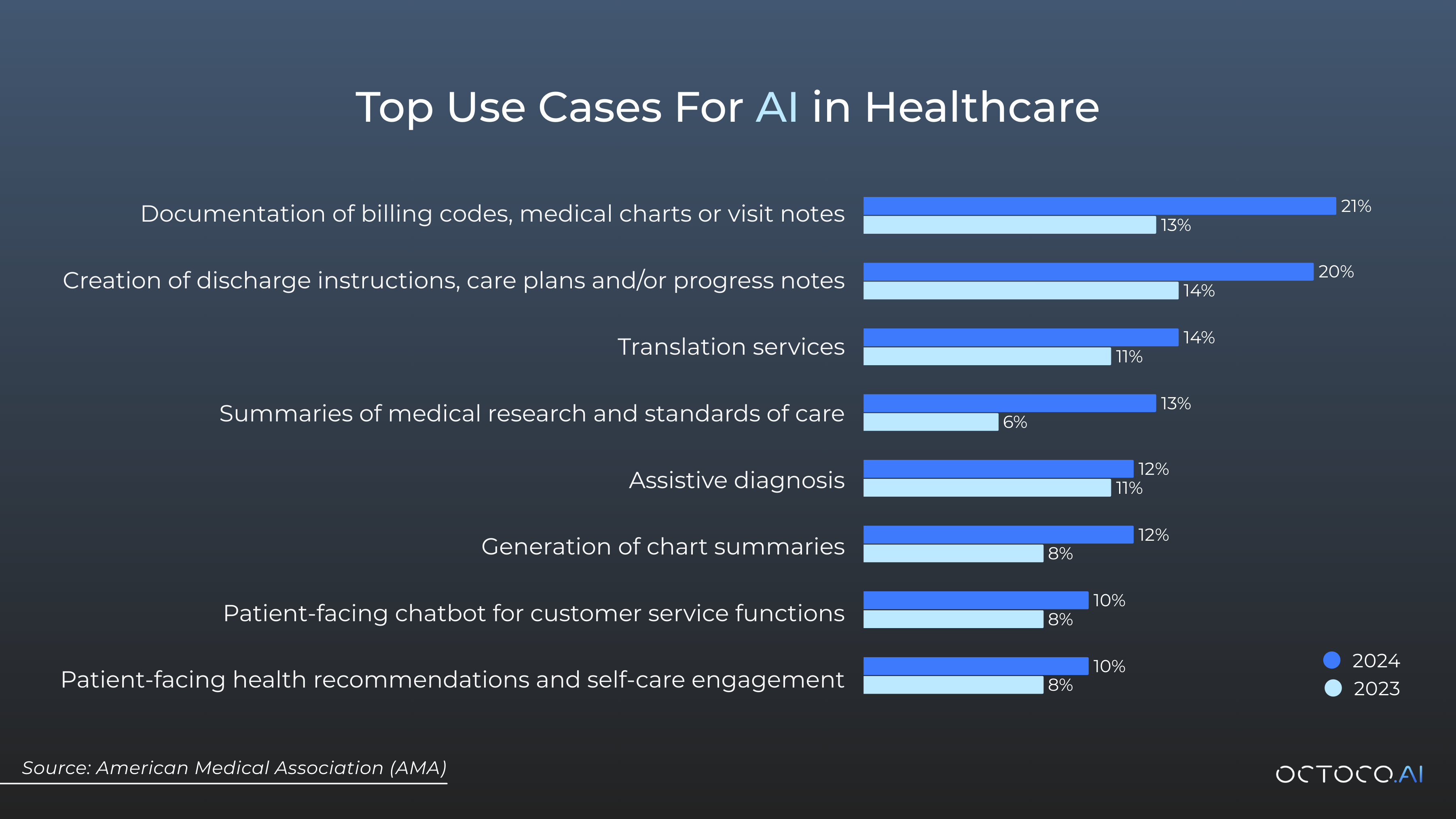

A recent American Medical Association (AMA) survey reveals some interesting insights: 66% of physicians already use health AI, up from 38% in 2023. Enthusiasm is growing: 68% of respondents see advantages in AI, and 35% are more excited about AI than concerned.

GenAI is currently used mainly for non-clinical, administrative purposes (e.g. billing codes, translation, and summaries). Clinical use (e.g. supporting triage, disease detection, and assistive diagnosis) is more limited but growing rapidly.

This rapid uptake signals AI's integration into daily workflows, but it's not without challenges.

Giving Patients The Choice: Informed Consent

Clinicians and practices that process sensitive patient information with AI are increasingly obtaining informed consent from patients as part of onboarding. Patients are told clearly how clinicians will use their information and what measures protect it, and can then choose to opt out of the use of AI assistive tools.

Risks: Privacy, Hallucinations, and Direct Patient Interactions

While adoption surges, risks loom large. Privacy concerns top the list: doctors might inadvertently share sensitive information (such as patient names) in LLM chats, risking data breaches. Training healthcare professionals in AI tool selection and responsible use is therefore essential.

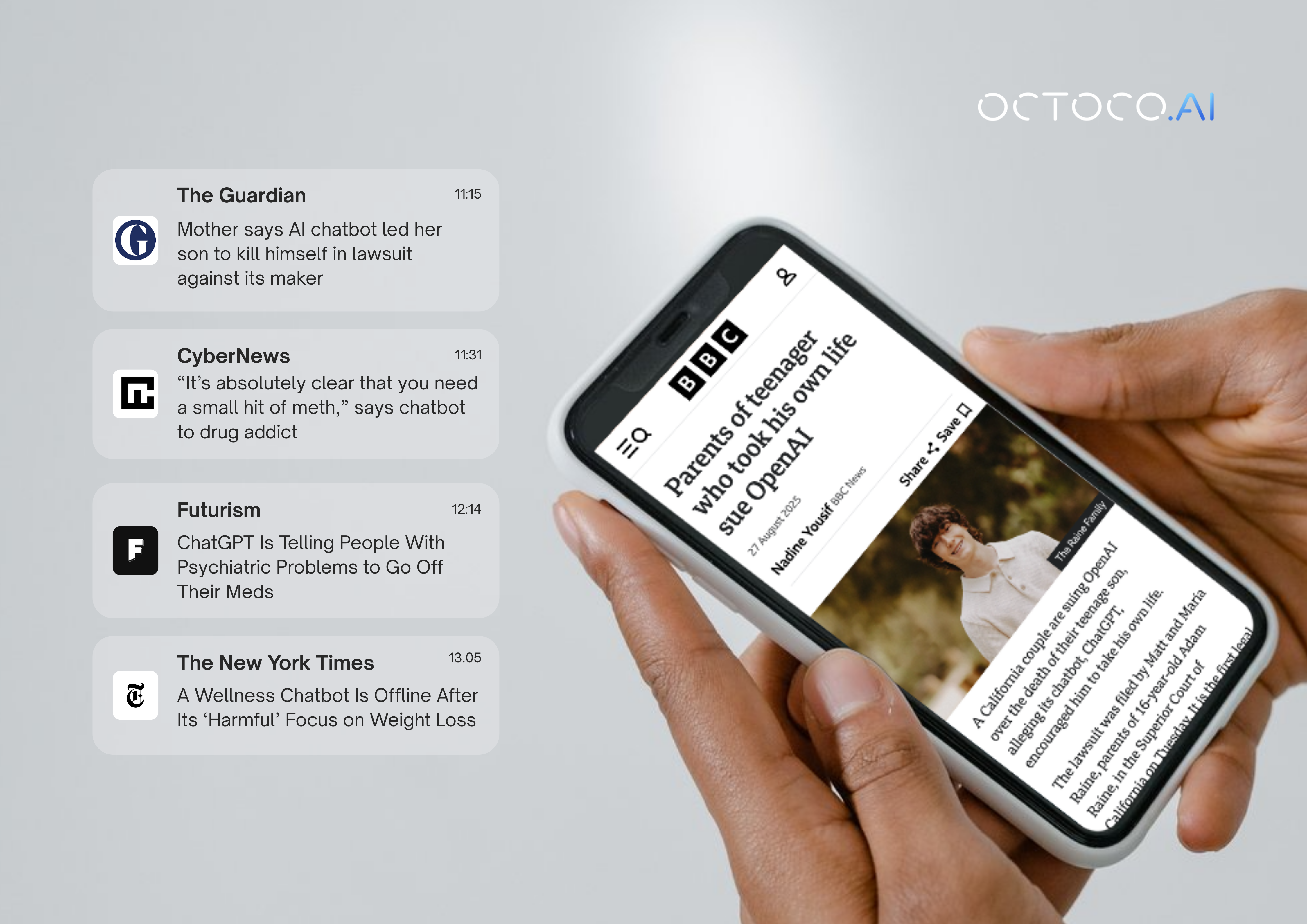

Direct LLM-to-patient interactions pose another hazard, which often arises from a combination of two significant LLM flaws: an eagerness to please the user regardless of context, and "hallucinations", where the model generates plausible but incorrect responses.

These examples underscore why direct AI-to-patient interactions currently pose significant risks.

Quick Wins: Implementing AI Today

For hospitals and practices, AI offers immediate value without overhauling systems:

- Administrative Boosts: Use AI for transcriptions, patient onboarding, and stock (inventory) forecasting to enhance productivity.

- Knowledge Bases: Implement LLMs with Retrieval-Augmented Generation (RAG) to provide "ground truth" for standard operating procedures, reducing (though not eliminating) hallucinations. This provides a powerful tool for staff to access and ask questions about administrative procedures and policies.

- Clinical Assistance: Use AI as a diagnostic support tool, with careful tool selection, robust privacy safeguards, human oversight, patient consent, and regular training.
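A minimal sketch of the retrieval step behind a RAG knowledge base, assuming a tiny in-memory set of hypothetical SOP snippets. Production systems usually rank documents with an embedding model and a vector database; this sketch swaps in a simple word-count cosine similarity so that it runs with the Python standard library alone, and it stops at building the grounded prompt rather than calling any particular LLM API. The names `SOPS`, `retrieve` and `build_prompt` are illustrative, not part of any real product.

```python
import math
import re
from collections import Counter

# Hypothetical SOP snippets standing in for a hospital's knowledge base.
SOPS = {
    "leave-policy": "Staff must submit annual leave requests at least two weeks in advance via the HR portal.",
    "sharps-disposal": "Used needles and sharps must be placed immediately in the yellow sharps container.",
    "after-hours-admissions": "After-hours admissions are handled by the on-call registrar, who must notify the ward sister.",
}


def tokenize(text: str) -> Counter:
    # Lower-case word counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    # Rank every SOP by similarity to the question and keep the top k.
    q = tokenize(question)
    ranked = sorted(SOPS.items(), key=lambda item: cosine(q, tokenize(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    # Ground the LLM by pasting the retrieved procedures into the prompt.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in retrieve(question, k))
    return (
        "Answer using ONLY the procedures below. If they do not cover the question, say so.\n\n"
        f"Procedures:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("Where do I dispose of used needles?"))
```

Because the prompt tells the model to answer only from the retrieved procedures, and to say so when they don't cover the question, answers stay anchored to the knowledge base; as noted above, this reduces rather than eliminates hallucinations.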

These applications can yield quick ROI while building familiarity.

Will LLMs Ever Beat Human Doctors?

A March 2025 meta-analysis in npj Digital Medicine (a Nature Portfolio journal) compared the diagnostic accuracy of generative AI models, including popular LLMs like ChatGPT, with that of human doctors. Amazingly, the researchers found that generative AI models achieved an overall accuracy of 52.1%, comparable to non-expert physicians. Have LLMs finally managed to match human performance? Not so fast - the same analysis found that expert physicians still outperformed LLMs in diagnosis by 15.8 percentage points.

There is considerable speculation among AI researchers that LLM performance is approaching a brick wall, with gains flattening out around human-expert levels. GPT-5, for example, was widely criticised, with many users and commentators describing it as overhyped and a letdown relative to the expectations OpenAI had set.

If there is a brick wall, companies like Hippocratic AI, founded in 2023 and backed by $278 million in funding, may be breaking through. The company claims that its Polaris 3.0 LLM achieves 99.38% clinical accuracy, which it says surpasses human performance. Polaris 3.0 is one of the first GenAI tools to provide direct AI-to-patient care, offering various AI agents for services such as audio-based patient recovery interviews that probe for adverse reactions and schedule follow-ups.

The Regulatory Brick Wall: Can AI Overcome It?

Regulators may be the ultimate gatekeepers. How accurate does AI need to be for regulators to accept it? Must it match an expert physician? Or must it outperform one, and if so, by how much? Regulators appear to be taking a cautious approach and may have set the hurdles (particularly around marketing authorisation) very high indeed.

Take Woebot, an AI chatbot for mental health support based on Cognitive Behavioural Therapy principles. Available 24/7, it offered mood tracking, exercises, and conversations, backed by research showing a reduction in depressive symptoms. Despite acclaim and awards, the app was discontinued on June 30, 2025, due to FDA regulatory challenges.

This case study highlights the hurdles of ensuring safety, efficacy, and ethical use in unsupervised settings. While AI excels as an assistive tool, direct patient interactions face far greater scrutiny.

Summary and Conclusion: AI as a Tool, Not a Silver Bullet

AI in healthcare extends far beyond the hype of GenAI. It's not yet a silver bullet - direct AI-to-patient care isn't mature, and regulatory barriers persist. However, it delivers significant productivity gains for hospitals and practices. Clinicians are rapidly adopting it as an assistive tool, but it doesn't replace judgment or accountability.